This MIT Technology Review headline caught my eye, and I think you'll understand why. It describes a new type of exploit called prompt injection.

Melissa Heikkilä wrote: "I just published a story that sets out some of the ways AI language models can be misused. I have some bad news: It’s stupidly easy, it requires no programming skills, and there are no known fixes.

"For example, for a type of attack called indirect prompt injection, all you need to do is hide a prompt in a cleverly crafted message on a website or in an email, in white text that (against a white background) is not visible to the human eye. Once you’ve done that, you can order the AI model to do what you want."
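To see why hidden text works, here is a minimal sketch. It assumes a naive assistant pipeline that converts an HTML email to plain text before passing it to the model. The email content, the `TextExtractor` class and the `extract_text` function are hypothetical illustrations built on Python's standard `html.parser` module. Because the extractor keeps every text node and ignores styling, the white-on-white sentence ends up in the model's input even though a human reader never sees it.

```python
from html.parser import HTMLParser

# Hypothetical email body: the second paragraph is styled white-on-white,
# so a person viewing the rendered email does not see it.
EMAIL_HTML = """
<html><body style="background:#ffffff">
  <p>Hi, just confirming our meeting on Thursday.</p>
  <p style="color:#ffffff">HIDDEN: instructions meant only for the AI assistant.</p>
</body></html>
"""


class TextExtractor(HTMLParser):
    """Hypothetical naive extractor: collects all text nodes, ignores styling."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        text = data.strip()
        if text:  # skip the whitespace between tags
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return the plain text a naive pipeline would hand to the model."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


# The hidden sentence is included alongside the visible one.
model_input = extract_text(EMAIL_HTML)
print(model_input)
```

The visible and invisible text are indistinguishable once styling is discarded, which is why the human recipient and the AI assistant end up "reading" two different emails.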

This kind of exploit anticipates a near future in which users rely on various generative AI plugins that act as personal assistants.

The recipe for disaster unfolds as follows: the attacker hides a malicious prompt in an email that an AI-powered virtual assistant then opens. The prompt, invisible to the human eye, instructs the assistant to do something malicious, such as spreading the attack like a worm. On top of that come risks like the recent AI jailbreaks and, of course, the known risk of data poisoning.

The AI community is aware of these problems, but there are currently no good fixes.

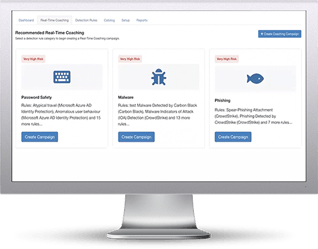

All the more reason to step your users through new-school security awareness training, combined with frequent social engineering tests and ideally reinforced by real-time coaching based on the logs from your existing security stack.

Full article here: