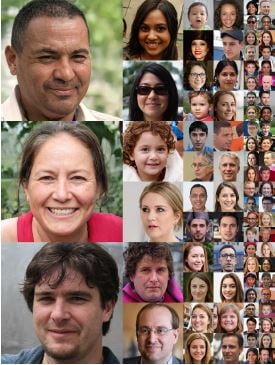

This is starting to become more than a little concerning. The faces in this post look like perfectly ordinary people. They could be social media shots. However, they were generated by a relatively recent type of algorithm: the generative adversarial network, or GAN.

NVIDIA researchers Tero Karras, Samuli Laine, and Timo Aila have published details of a method for producing completely imaginary faces with stunning, almost eerie, realism (here's the paper).

GANs use two "dueling" neural networks. A generator produces fakes, and a discriminator tries to tell those fakes apart from real examples. Each network improves by competing with the other, until the generator has learned the nature of the dataset well enough to produce convincing fakes. Applied to images, this can yield highly realistic still images that could be used in extremely hard-to-detect social engineering attacks, especially when combined with deepfake videos.
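For readers who want the idea behind the "duel", here it is as an equation. The original GAN paper (Goodfellow et al., 2014) frames it as a minimax game. Here D(x) is the discriminator's estimated probability that an image x is real, G(z) turns random noise z into a synthetic image, p_data is the distribution of real images, and p_z is a simple noise distribution. This is the textbook formulation, not necessarily the exact loss NVIDIA trained with. Later work, possibly including theirs, often substitutes variants.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up by scoring real images near 1 and fakes near 0. The generator pushes V down by making fakes the discriminator scores as real. In the ideal end state, the generated images are indistinguishable from real ones and D outputs 1/2 everywhere.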

You can now generate a completely synthetic human face, map it onto a video, and make it say whatever you want, all with recent algorithms. This brand-new video demonstrating full-body synthesis illustrates the problem.

The only missing piece is to equip that construct with natural language processing (NLP) and a high-quality, real-time back-end engine. At that point you have your first evil AI, one that can scam untrained users into revealing their username and password in under a minute. Yikes.

Here is the new NVIDIA video (YouTube) showing how GANs can do this without any human supervision:

Unfortunately, things are not getting any better online. More than ever, your users need to stay on their toes and keep security top of mind.