New testimony to the U.S. Senate Armed Services Committee's Subcommittee on Cybersecurity by Microsoft's Chief Scientific Officer sheds light on AI-powered cyberattacks.

Cyberattacks evolve continually as threat actors and gangs learn from their successes and failures and find new ways to achieve their malicious goals. Security vendors have used artificial intelligence (AI) in their solutions for years to identify attacks, threats, malware, and malicious activity. But according to new testimony from Microsoft's Eric Horvitz, cybercriminals have been quietly developing ways to leverage AI to improve their chances of a successful attack.

According to Horvitz's blog post about his testimony, attackers are using AI in several ways:

- Scaling cyberattacks through various forms of probing and automation

- AI-powered social engineering that optimizes the timing and content of messages, including translations of malicious emails

- Use in the “discovery of vulnerabilities, exploit development and targeting, and malware adaptation, as well as in methods and tools that can be used to target vulnerabilities in AI-enabled systems”

- Adversarial AI that poisons the data used by AI-based security solutions in order to change how those solutions behave

This next generation of malicious AI will no doubt improve cybercriminals' chances of success at every phase of an attack, from the initial attack vector (such as phishing) to endpoint compromise, lateral movement, and beyond.

To stay ahead of AI-based cyberattacks, Horvitz recommends:

- Improved cyber hygiene, including MFA, backups, patch management, incident response plans, and more

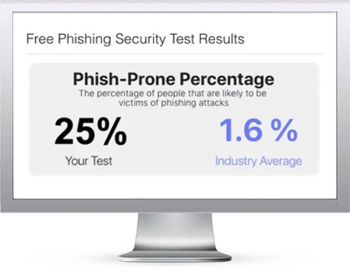

- Security Awareness Training for the U.S. workforce, so that users themselves can help stop these evolving attack techniques by following sound corporate policies that dictate how to respond to any request involving a financial transaction

Horvitz sums up his testimony this way: “Significant investments in workforce training, monitoring, engineering, and core R&D will be needed to understand, develop, and operationalize defenses for the breadth of risks we can expect with AI-powered cyberattacks.” It's a warning every organization should heed today.
