In emergency healthcare settings, the “golden hour” is the period right after a life-threatening event (e.g., heart attack, stroke, or aneurysm) during which a patient is most likely to recover with the best possible outcome if treated with the appropriate therapies. Healthcare workers are taught that, to help the most people, they need to quickly diagnose the right illness or injury and begin the right therapies. Every minute of delay further risks a patient’s positive outcome.

Cybersecurity researchers have applied the same idea to phishing attacks in their recent whitepaper entitled Sunrise to Sunset: Analyzing the End-to-end Life Cycle and Effectiveness of Phishing Attacks at Scale. Looking at the behavior of 4.8 million phishing victims who were tricked into visiting over 400,000 unique phishing URLs, they gleaned some interesting facts, including:

- The average phishing campaign from start to the last victim takes just 21 hours

- On average, it takes anti-phishing entities nine hours to detect and start to respond to a new phishing attack

- At least 7.42% of visitors supply their credentials and ultimately experience a compromise and subsequent fraudulent transaction

- Ten percent of phishing campaigns are responsible for 89% of victims

I get sent numerous “research” papers and studies every day. Most are a waste of my time. They don’t say anything new and they don’t include verified data from real attacks. This paper is the opposite of that and I’m glad I took the time to read it. The authors, researchers with Arizona State University, PayPal, Google, and Samsung, refer to the period between first detection (nine hours in) and the shutdown of the involved phishing campaign (21 hours in) as the “golden hours”: the window from when defenders potentially had the capability to take down a phishing threat to the point in time when it finally did get taken down. On average, it takes phishing mitigations 12 hours after detection to put down a new phishing threat. That 12-hour gap is the “golden hours” for both hackers and defenders. Nearly 38% of victim traffic to a phishing website and over 7% of successfully phished victims occurred during the golden hours. If defensive mitigations were more proactive, we would have fewer victims.

Key takeaway - how to shrink the phishing golden hours

One of the key recommendations of the paper is for more aggressive and smarter anti-phishing mitigations to begin as soon as the phishing attack is launched and first detected, before it has time to claim victims (i.e., minimize the golden hours). This isn’t actually a new thought. It’s every defender’s goal. But I think the paper did a good job of breaking the phishing lifecycle into discrete phases, better than I’ve seen elsewhere, and then offered actionable ways to shrink the golden hours.

One of their unique suggestions comes from noting that phishing emails often have lots of legitimate content that originates from the real website of the vendor they are impersonating. Oftentimes, everything in a phishing email points to the real (impersonated) vendor’s content, except for the one URL that the victim is being coaxed to click on. It’s a very common tactic, giving fake phishing emails a feeling of legitimacy. The paper’s authors ask impersonated brands to look out for suspicious linking to their legitimate content. Certainly, the impersonated brands could easily, if only they looked for it, alert on sudden increases in traffic from domains and locations they don’t officially partner with. The authors also ask impersonated brands to quickly research those suspicious links and report them sooner to anti-phishing sites for faster takedown.
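To make the “alert on sudden increases in traffic from domains you don’t partner with” idea concrete, here is a minimal Python sketch. It is not the paper’s method, just one way a brand might approach it. It assumes the brand’s web server log can be reduced to pairs of (requested asset, Referer value). The allowlist `KNOWN_REFERRER_DOMAINS`, the function names, and the threshold are all hypothetical choices for illustration.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical allowlist: domains the brand officially serves content to.
KNOWN_REFERRER_DOMAINS = {"bank.example", "partner.example"}


def referrer_domain(referrer_url):
    """Return the lowercase hostname of a Referer value, or None if absent."""
    host = urlparse(referrer_url).hostname
    return host.lower() if host else None


def is_known(domain, known_domains):
    """True if the domain is an allowlisted domain or one of its subdomains."""
    return any(domain == k or domain.endswith("." + k) for k in known_domains)


def suspicious_referrers(requests, known_domains, threshold):
    """Count asset requests per unknown referring domain.

    `requests` is an iterable of (asset_path, referrer_url) pairs taken from
    one time window of the log. Returns {domain: count} for every unknown
    domain whose count meets or exceeds `threshold` (an assumed tuning knob).
    """
    counts = Counter()
    for _asset, referrer in requests:
        domain = referrer_domain(referrer)
        if domain is None or is_known(domain, known_domains):
            continue  # no Referer, or traffic from an expected source
        counts[domain] += 1
    return {d: n for d, n in counts.items() if n >= threshold}
```

In practice you would run something like this over short time windows and send any flagged domains to a human for review before reporting them. Requests with no Referer value (common when mail clients load images) are simply skipped here, so this only catches part of the traffic.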

That reminds me of a similar real-life story a few years ago where a phisher was impersonating a bank. The bank noticed the malicious linkage fairly quickly in the phishing lifecycle and simply renamed one of the images that the phisher’s email was linking to and updated their own legitimate links to point to the new name. They then created new content that pushed an image to any potential victims which warned that the involved email was a phishing attack and recommended that they take the appropriate actions and cautions. It was brilliant! They used the phisher’s own email against them and put down the attack very quickly and early on.

Another recommendation is for “known visitors”, people who have been detected as successfully falling for the phishing attack, to be identified and proactively reported to their involved organization or domain so that entity can be proactive in helping to better protect the victim and the involved organization. Imagine being a sysadmin and getting real-time reports of who in your organization has fallen victim to a phishing attack, and being able to quickly reset their passwords and get their originating device cleaned and secured…proactively. Dare to dream!
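As a rough illustration of what that reporting step could look like, the Python sketch below groups a list of known victim email addresses by domain, so each organization could be sent only its own affected accounts. The function name and the idea of keying on the email domain are my assumptions, not something the paper specifies.

```python
from collections import defaultdict


def group_victims_by_domain(victim_emails):
    """Group victim email addresses by their domain.

    Returns {domain: sorted list of unique lowercase addresses}. Entries
    that do not look like email addresses are skipped.
    """
    grouped = defaultdict(set)
    for raw in victim_emails:
        email = raw.strip().lower()
        local, sep, domain = email.rpartition("@")
        if not sep or not local or not domain:
            continue  # not in local@domain form
        grouped[domain].add(email)
    return {domain: sorted(addrs) for domain, addrs in grouped.items()}
```

Each per-domain list could then be handed to that organization’s security contact, who can reset passwords and check the affected devices.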

Limits to recommended approach

The authors are very clear on the limits of their approaches and what they can and cannot do. No phishing mitigation is 100% effective in mitigating all phishing. In fact, every policy and technical phishing mitigation you can put in place all together will fail at least some of the time. It’s guaranteed to happen. That’s why it’s a must that your users be trained in how to spot social engineering attacks and how to treat them (which is hopefully to report and delete them). You want to put the best possible combinations of policy, technical, and training mitigations to prevent, detect, and respond to phishing attacks.

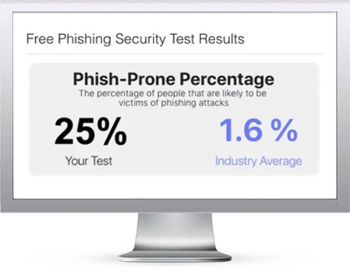

Users who undergo continual Security Awareness Training are better prepared for when (not if) a malicious phishing email reaches their inbox. This training helps users understand the need for vigilance when interacting with potentially harmful emails and educates them on how to identify suspicious or malicious content that may be the starting point for an attack.

If you fight phishing, I think you should definitely download and read this whitepaper. It’s one of the best.
