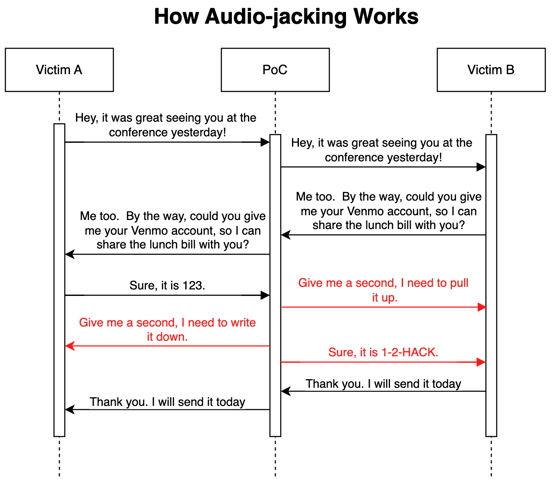

Setting out to “audio-jack” a live, call-based banking transaction, security researchers successfully inserted cybercriminal-controlled account details into the conversation.

Deepfake audio is nothing new, but it is getting very advanced. So much so that security researchers at IBM Threat Intelligence were able to test whether it’s possible to perform an audio-based “Man in the Middle” attack.

Using generative AI and more than three seconds of someone’s voice recording, they were able to demonstrate how an attacker might insert themselves into a conversation and switch account details without either party noticing.

Source: Optimizely

Once this kind of technology becomes available to the masses via the cybercrime ecosystem, anyone within an organization responsible for monetary transactions will need to use out-of-band verification to confirm that the person speaking is actually the person calling.

The days of a verbal password will be gone. Multi-factor authentication will be required for any and all financial requests, even those made from within the organization, and processes will need to be set up to verify requests before they are processed.
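As a rough illustration of what such a verification process might look like, here is a minimal Python sketch. Everything in it is hypothetical (the `PaymentRequest` class, the `confirm_out_of_band` and `process` functions, and the idea of delivering the one-time code over a second channel such as an app or text message); it is not a description of any particular product. The point it shows is that the account details heard on the call are only acted on once the requester repeats them over a separate channel, so details swapped mid-call would not match.

```python
import hmac
import secrets
from dataclasses import dataclass, field


@dataclass
class PaymentRequest:
    """A payment request as captured from a phone call (hypothetical model)."""
    requester: str
    account_number: str  # account details as heard on the call
    amount: float
    # One-time code, assumed to be delivered over a second channel (not the call)
    challenge: str = field(default_factory=lambda: secrets.token_hex(4))
    verified: bool = False


def confirm_out_of_band(request: PaymentRequest,
                        echoed_account: str,
                        echoed_code: str) -> bool:
    """Mark the request verified only if the second channel echoes back
    both the one-time code and the same account number heard on the call."""
    # compare_digest does a constant-time comparison (ASCII strings assumed)
    code_ok = hmac.compare_digest(request.challenge, echoed_code)
    account_ok = hmac.compare_digest(request.account_number, echoed_account)
    request.verified = code_ok and account_ok
    return request.verified


def process(request: PaymentRequest) -> str:
    """Refuse to act on any request that has not been verified out of band."""
    if not request.verified:
        raise PermissionError("out-of-band verification required")
    return f"paid {request.amount:.2f} to {request.account_number}"
```

In this sketch, if an attacker altered the account number during the call, the number the requester confirms over the second channel would differ from the one recorded, and `process` would refuse to pay.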

Because the attack works by inserting audio into a hijacked conversation, it’s unlikely either party would be any the wiser that something was amiss. So it’s going to be up to each party to the transaction to remain vigilant through new-school security awareness training and to keep to the organization’s cybersecurity policies and processes.
