Threat actors continue to use generative AI tools to craft convincing social engineering attacks, according to Glory Kaburu at Cryptopolitan.

“In the past, poorly worded or grammatically incorrect emails were often telltale signs of phishing attempts,” Kaburu writes.

“Cybersecurity awareness training emphasized identifying such anomalies to thwart potential threats. However, the emergence of ChatGPT has changed the game. Even those with limited English proficiency can now create flawless, convincing messages in perfect English, making it increasingly challenging to detect social engineering attempts.”

Legitimate AI tools such as ChatGPT try to block malicious output, but threat actors can often find ways around these restrictions.

“OpenAI has implemented some safeguards in ChatGPT to prevent misuse, but these barriers are not insurmountable, especially for social engineering purposes,” Kaburu says. “Malicious actors can instruct ChatGPT to generate scam emails, which can then be sent with malicious links or requests attached. The process is remarkably efficient, with ChatGPT quickly producing emails like a professional, as demonstrated in a sample email created on request.”

Threat actors can also use AI-generated voice messages to supplement their attacks.

“While ChatGPT primarily focuses on written communication, other AI tools can generate lifelike spoken words that mimic specific individuals,” Kaburu writes. “This voice-mimicking capability opens the door to phone calls that convincingly imitate high-profile figures. This two-pronged approach—credible emails followed by voice calls—adds a layer of deception to social engineering attacks.”

Kaburu offers the following recommendations to help users avoid falling for AI-generated social engineering attacks:

- “Incorporate AI-generated content in phishing simulations to familiarize employees with AI-generated communication styles.”

- “Integrate generative AI awareness training into cybersecurity programs, highlighting how ChatGPT and similar tools can be exploited.”

- “Employ AI-based cybersecurity tools that leverage machine learning and natural language processing to detect threats and flag suspicious communications for human review.”

- “Utilize ChatGPT-based tools to identify emails written by generative AI, adding an extra layer of security.”

- “Always verify the authenticity of senders in emails, chats, and texts.”

- “Maintain open communication with industry peers and stay informed about emerging scams.”

- “Embrace a zero-trust approach to cybersecurity, assuming threats may come from internal and external sources.”

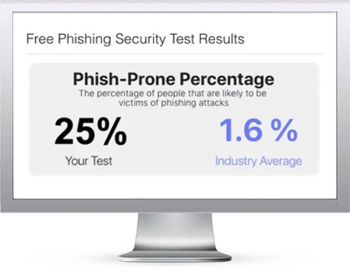

KnowBe4 enables your workforce to make smarter security decisions every day. Over 65,000 organizations worldwide trust the KnowBe4 platform to strengthen their security culture and reduce human risk.

Cryptopolitan has the story.
