The current landscape of artificial intelligence (AI) bears a striking resemblance to the early days of the internet. Just as the internet was once a wild, untamed frontier full of promise and potential, AI now stands at a similar crossroads.

The excitement surrounding AI is palpable. However, this enthusiasm is tempered by the knowledge that, left unregulated, AI could be misused or lead to catastrophic errors, much like a powerful tool in the hands of an untrained user.

The concentration of power in the hands of a few organizations is a looming threat. If a select few gain a monopoly over AI, they could build their own biases into systems that millions of people rely on and put individuals' privacy at risk, much like a puppet master pulling the strings behind the scenes. The consequences of such a scenario could reach into every aspect of our lives.

It's crucial to recognize that AI is not a replacement for human decision-making but a collaborative tool. We must treat AI as an augmentation of our abilities, not a substitute for them. The temptation to let AI make decisions wholesale on our behalf is a siren's call, luring us towards the rocks of unintended consequences.

The AI Act

The European Union's AI Act and its associated laws are a beacon of hope in this uncertain landscape. By establishing a framework that safeguards people while allowing AI to flourish, the legislation lights the path forward. Its risk-based approach works like a safety net: the strictest rules apply to the AI systems with the highest potential for harm. Practices deemed to pose an unacceptable risk, such as social scoring, are banned outright; high-risk systems must meet strict obligations; and systems with limited or minimal risk face light transparency requirements or none at all.

The Act also establishes governing bodies, such as the AI Office within the European Commission and the AI Board, made up of representatives of the member states. Like the pillars that support a building, these bodies will enforce the common rules across the EU and help ensure that the AI Act is applied consistently and effectively.

As we navigate this brave new world of AI, we must proceed with caution and be vigilant in our efforts to prevent the misuse of this powerful technology. We must ensure that it is developed and deployed in a manner that respects fundamental rights and promotes the greater good.

The AI Act is a significant milestone in this journey, like a compass pointing us in the right direction. By striking a balance between innovation and regulation, it offers a model for other countries to follow.

The AI Act and Security Awareness Training

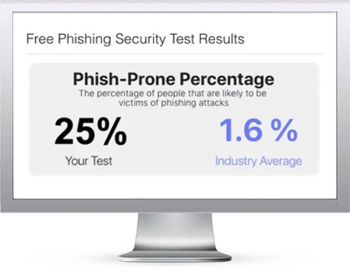

The rise of AI presents both opportunities and challenges for security awareness training. On one hand, AI-powered tools can enhance the effectiveness of training programs by personalizing content, adapting to individual learning styles, and providing real-time feedback.

This can make training sessions more engaging and effective, ultimately reducing the human risk that exists within organizations. On the other hand, cybercriminals are also using AI to craft more sophisticated and convincing phishing attacks, making it harder for employees to spot and avoid these scams.

The AI Act, while a step in the right direction, may have limited impact on curbing the misuse of AI by cybercriminals, as they operate outside the boundaries of legal frameworks. Therefore, it is crucial for security awareness training to evolve alongside the threat landscape, incorporating AI-driven simulations and emphasizing critical thinking skills to empower employees to navigate the increasingly complex world of cyber threats.

Here's how it works: