A new study found that ChatGPT can accurately recall, at a later date, any sensitive information fed to it as part of a query, with no controls in place to restrict who can retrieve it.

The frenzy to take advantage of ChatGPT and similar AI platforms has likely led some users to feed them plenty of corporate data so that the AI can process it and return insightful output.

The question becomes who can see that data. In 2021, a research paper published at Cornell University looked at how easily “training” data could be extracted from GPT-2, a predecessor of the models behind ChatGPT. And according to data detection vendor Cyberhaven, nearly 10% of employees have used ChatGPT in the workplace, with slightly less than half of them pasting confidential data into the AI engine.

Cyberhaven goes on to provide a simple example of how easily the combination of ChatGPT and sensitive data could go awry:

A doctor inputs a patient’s name and details of their condition into ChatGPT to have it draft a letter to the patient’s insurance company justifying the need for a medical procedure. In the future, if a third party asks ChatGPT “what medical problem does [patient name] have?” ChatGPT could answer based on what the doctor provided.

Organizations need to be aware of how cybercriminals could misuse any data fed into such AI engines, or even create a scam that pretends to be ChatGPT to some degree. These outlier risks are just as big a threat as phishing attacks, which is why every user within the organization should be enrolled in security awareness training as a first step towards a security-centric culture.

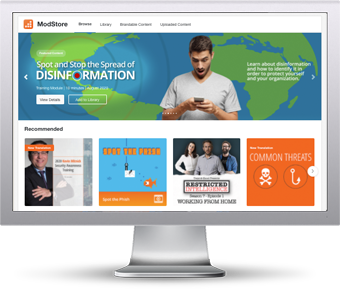

The ModStore Preview includes: