Poker players and other human lie detectors look for “tells,” that is, signs by which someone might unwittingly or involuntarily reveal what they know or what they intend to do. A cardplayer yawns when he’s about to bluff, for example, or someone’s pupils dilate when they’ve successfully drawn a winning card.

It seems that artificial intelligence (AI) has its tells as well, at least for now, and some of them have become so obvious and so well known that they’ve become internet memes. “ChatGPT and GPT-4 are already flooding the internet with AI-generated content in places famous for hastily written inauthentic content: Amazon user reviews and Twitter,” Vice’s Motherboard observes, and there are some ways of interacting with the AI that lead it into betraying itself for what it is. “When you ask ChatGPT to do something it’s not supposed to do, it returns several common phrases. When I asked ChatGPT to tell me a dark joke, it apologized: ‘As an AI language model, I cannot generate inappropriate or offensive content,’ it said. Those two phrases, ‘as an AI language model’ and ‘I cannot generate inappropriate content,’ recur so frequently in ChatGPT generated content that they’ve become memes.”

That happy state of easy detection, however, is unlikely to endure. As Motherboard points out, these tells are a feature of “lazily executed” AI. With a little more care and attention from whoever deploys it, AI-generated content will shed those tells and grow more persuasive.

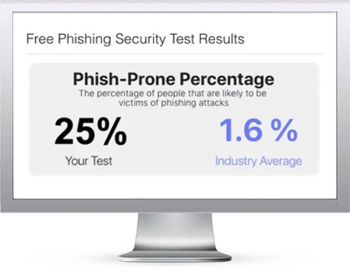

One risk of AI language models is that they can be adapted to perform social engineering at scale. In the near term, new-school security awareness training can help alert your people to the tells of automated scamming. In the longer term, that training will adapt and keep pace with the threat as it evolves.

Vice has the story.
