Social engineering and phishing happen when a con artist sends a fraudulent message, pretending to be a person or organization the potential victim might trust, in order to get the victim to reveal private information (e.g., a password or document) or perform some other action (e.g., run a Trojan Horse malware program) that is against the best interest of the victim or their organization. Most are quick, one-shot attempts: one email, one rogue link, one phone call. The fraudster is counting on the victim responding immediately, because the longer a potential victim takes to respond, the less likely they are to fall for the criminal scheme.

But there is another version of social engineering and phishing that plays out over a longer period and requires multiple actions by the victim to succeed. Many sophisticated hackers intentionally spend weeks or months building rapport with a potential victim, creating a trusted relationship over time that they eventually exploit. These long-term cons are often more devastating to the victim's interests. Although they are far rarer than single-shot phishing, they do happen, so everyone needs to be aware of them; awareness is the key to fighting them. Let's take a closer look at how they come about, some examples of long-term con scams, and what we can do to better protect ourselves, our teams, and our organizations.

Pretexting

These longer-term cons often involve pretexting: inventing a scenario in order to persuade a targeted victim to release information or perform some action. Pretexting can also involve impersonating people in certain jobs or roles, such as technical support or law enforcement, to obtain information. It usually takes some back-and-forth dialogue over email, text, or phone, and it focuses on getting the targets themselves to hand over information or take action. Those targets usually work in high-risk departments such as HR or Finance.

In long-term con scams, scammers don't rush the pretexting. A social engineer may call a potential victim, say someone in accounts payable, and introduce themselves as the new contact point at a company that the accounts payable person regularly pays invoices to. Most business email compromise scams would immediately ask the accounts payable person to pay a new invoice to a new bank, which would rightly raise considerable suspicion. Instead, the phisher casually mentions the name of the person the accounts payable employee previously dealt with and gives a plausible reason that person has moved on, such as a promotion.

After establishing themselves as the new contact, they lay the groundwork for changes that might happen in the future. For example, they might offer a sob story such as, "And our new boss is bringing in a new accounting system, so we're all having to learn new tasks and a new system at the same time. Can you believe that? Like my job isn't hard enough. And I hear he's also thinking about switching to a new bank with better interest rates that he used at his previous job. I swear every new boss ends up bringing the system from their last job, and it's up to us to learn everything new. But for now, nothing changes. Just keep paying the invoices to the same place you always have. I'll send you the updated information when we get it. Thanks for your patience." Just like that, the hacker establishes a foothold of trust. By not asking for any immediate transaction or change, they avoid raising initial suspicion and begin building a new relationship.

Compromising IT Security Researchers

The risk of the long-term phishing scam came rushing back with recent reports of a very sophisticated North Korean campaign against multiple security researchers. The scammers created fake identities, Twitter profiles, YouTube videos, and research blogs. They not only posted their own "original" research (which turned out to be fake or rehashes of other experts' discoveries) but also succeeded in getting real people to write new articles for their blogs and Twitter accounts. All the information and postings were re-amplified through the other fake identities and blogs, and by real, unsuspecting researchers, lending an aura of legitimacy to the fraudulent identities and content.

After gaining the trust of respected security researchers, the fraudsters sent them Microsoft Visual Studio project files poisoned with Trojan Horse code as part of a supposed vulnerability research collaboration. The victimized researchers then unknowingly installed malicious code that compromised their own devices, organizations, and information. In other cases, simply visiting the fake researchers' blog appears to have installed malware on legitimate researchers' fully patched computers. The attackers could then see what the legitimate researchers were working on. That is remarkable access, because many researchers know about dozens to hundreds of unannounced vulnerabilities. An attacker who learned about these unannounced vulnerabilities could, at the very least, tell when their own real-world attacks were starting to be noticed. A more dangerous scenario is that they could use those "0-days" against any organization running the affected software.

You can read more about this fascinating, true-life scenario. This blog article recounts the malicious activity in more detail and lists the Twitter and blog links involved. The Register also has more information in this article.

The North Korean long-term con is clearly a nation-state attack, as was North Korea's highly successful attack against Sony Pictures in 2014. That attack made every company start worrying about nation-state attacks. Before it, most companies worried about sophisticated, well-resourced attacks by nation-states only if they were in the national defense business. Once Sony Pictures' emails were leaked, every company realized it could be successfully targeted by a nation-state simply for having offended another country, possibly without even realizing it. It was a wake-up call. This campaign targeting security researchers is another.

Pretty Good Privacy Scam

The security researcher example is startling, but it is not new. I remember back in the 1990s when a computer security reporter decided to prove that security researchers could be scammed, especially if they relied on Pretty Good Privacy (PGP) digital keys to establish trust. Back then, PGP was considered a gold standard for privacy and identification. Anyone could create a PGP public/private key pair, and a sender would use the recipient's public key to send them encrypted messages. The recipient could send their public key to the sender as needed, or it could be stored on distributed, public PGP key servers and downloaded whenever needed. Unlike the Public Key Infrastructure (PKI) model, PGP has no inherent third party that verifies the user's identity before signing the user's key (creating a digital certificate). The best PGP could do was have other PGP users attest that a participating sender was who they said they were, by using their own (verified or unverified) PGP keys to sign that person's key. It was sort of like an SAT math prep question: If A trusts B and B trusts C, then A can trust C. The problem was that few people involved in the key verification process really went out of their way to verify anyone or any key.
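To make the "If A trusts B and B trusts C" weakness concrete, here is a minimal Python sketch of a naive transitive-trust rule over a graph of key signatures. It is a deliberate simplification, not how PGP or GnuPG actually compute key validity (real implementations add owner-trust levels and limits on marginal signatures and certification depth), and the identities in the graph are hypothetical.

```python
from collections import deque


def naively_trusted(signatures: dict[str, set[str]], me: str) -> set[str]:
    """Return every identity reachable from `me` by following key signatures.

    signatures[x] is the set of identities whose keys x has signed.
    This models only the naive rule "if A trusts B and B trusts C, A trusts C";
    it is NOT the real PGP/GnuPG trust model.
    """
    trusted: set[str] = set()
    queue = deque([me])
    while queue:
        current = queue.popleft()
        for signed in signatures.get(current, set()):
            # Skip ourselves and anyone already visited (handles cycles).
            if signed != me and signed not in trusted:
                trusted.add(signed)
                queue.append(signed)
    return trusted


# Hypothetical signature graph: Bob signs the fake persona's key after
# chatting online, without checking who is really behind it.
signatures = {
    "alice": {"bob"},
    "bob": {"carol", "fake_persona"},
    "carol": {"dave"},
}

print(sorted(naively_trusted(signatures, "alice")))
# ['bob', 'carol', 'dave', 'fake_persona']
```

Because Bob signed the fake persona's key without verifying it, the naive rule makes Alice "trust" the fake persona exactly as much as Carol or Dave. One careless signature is enough to extend the con to everyone downstream of it.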

To reveal that PGP's whole digital key trust mechanism was flawed and built on weak, unverified assumptions, the security reporter created a fake identity: a beautiful female security researcher. There were not many of those back in the day, so "her" presence was sure to attract a lot of attention. This persona created PGP keys and began corresponding with dozens of internationally recognized security researchers, including names you would still recognize as authorities today. "She" gained the trust of many of those researchers over time simply by participating in online conversations and appearing interested in their research. Eventually, one respected researcher referred "her" to others, and trusted researchers signed and vouched for "her" PGP key to one another.

In the end, the reporter revealed the scam and the fake persona, and shared that he had been able to gain access to a great deal of otherwise secret security research data and reports. It was similar to the North Korean scam, but with PGP keys instead of Twitter and YouTube (which did not yet exist). The reporter's revelation blew the doors off the "security" of PGP keys and showed that security researchers could be fooled by digital Mata Haris just as easily as their real-world counterparts. It turns out flattery (and, in some instances, a hint of sexual innuendo) works as well to allay suspicion now as it did in the 1990s or during World War I. A big part of these spying scams is the long-term play. The slower and longer a scammer plays the game, the more likely they are to gain real trust. Time appears to be a big advantage to phishers when the scam is done right.

Scams Can Involve Real Companies

Surely one of the biggest and most financially damaging phishing scams was carried out by a single person who phished more than $120M from the likes of Facebook and Google over a three-year period. The scammer, Evaldas Rimasauskas, successfully convinced very sophisticated and knowledgeable accounts payable clerks and executives that he was the new contact point for their ongoing computer purchases. To that end, he registered real, incorporated companies in other countries with the same names as the companies he was impersonating, and he created look-alike domain names.

Rimasauskas was eventually arrested, ironically because of the elaborate paper trail he had built, and sentenced to five years in prison. It was another example of an audacious scam, conducted over several years, that fooled some of the most advanced and best-prepared targets. I remember thinking at the time of his arrest, "If Google and Facebook can be scammed, what hope do the rest of us have?" It turns out that what we need is awareness of these types of scams, training, and policies.

Defenses

Awareness of these types of threats is the first defense. The best thing you can do to avoid these scams is to make sure every employee with access to sensitive information (financial data, research, etc.) knows about and understands them. Share this article and others like it. People must be aware that not all phishing scams are single emails asking for immediate action. That's step one.

Step two is to make people aware that email, texting, and phone calls are not definitive authentication. A phone call or text can come from anywhere. Even if the sender or caller is not spoofing the phone number (or SMS short code) involved, unless the number is already known to the recipient, how can the recipient know who is really calling or texting? They can't. Everyone needs to understand that anything other than a face-to-face meeting, or a voice call from a familiar voice and/or a phone number with a long history of trust, must be treated skeptically from the very beginning, especially by people whose work involves finances or research.

Educate employees that the person calling them claiming to be from the company’s bank may not be from the bank. The SMS text claiming to be from Google security may not really be Google security. A blog claiming to be from a respected security researcher may not be from a respected security researcher even if other people who you trust are vouching for them. “Hey, you can trust them because I trust them!” is a claim that has been proven wrong against hundreds of thousands of victims over the centuries.

US President Ronald Reagan is credited with popularizing a well-known Russian proverb, "Trust, but verify." That's good advice for anyone involved in any transaction. If someone calls out of the blue claiming to be your new contact point, reach out to the former contact person to verify. If the new person claims the former contact has been laid off, call the former contact's boss. If an email arrives from a person you trust, from their regular email address that you recognize, but it says you are supposed to send money to a new bank, call that person at their previously documented phone number and verify. If someone calls claiming to be from Microsoft and says they have discovered viruses on your computer, ask whether you can call Microsoft's well-known, publicly listed tech support number and be transferred to them. If they say no, hang up.

Create training that teaches people about long-term con phishing attempts and scams and how to defend against them: trust, but verify. You cannot trust that anyone on a phone call, text message, or email is who they claim to be without additional verification. Teach everyone to keep a healthy level of skepticism about any new interaction that could potentially compromise their device, network, or organization.

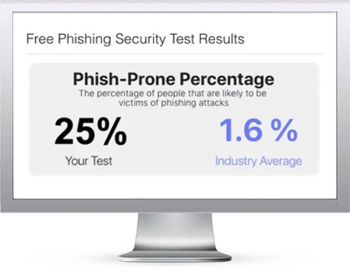

The vast majority of your security awareness training should focus on the more common types of phishing scams that we see every day. But don't forget to discuss the potential damage from long-term phishing scams periodically throughout the year, especially with the people most likely to be targeted (e.g., accounts payable, HR, finance, senior management, researchers). You must make people in positions of great responsibility aware of these longer-term ploys.

Additionally, create policies that reduce the chances these long-term scams succeed. For instance, require voice confirmation from a previously known contact at a previously documented phone number for any change to payment information. Go further by mandating that email alone can never be used for verification. Both measures make these types of scams less likely to succeed. If you have researchers, create policies that require independent verification before any research is shared, and require that all newly installed software be inspected for malware.
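As an illustration only, the payment-change rule above could be encoded in an internal approval workflow roughly like the minimal Python sketch below. The `Vendor` and `BankChangeRequest` types, their fields, and the phone numbers are all hypothetical. The point is that the check refuses any change that was not confirmed by voice through a callback to the number on file from before the request arrived, and it ignores contact details supplied in the request itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Vendor:
    """Hypothetical vendor record kept in the accounts payable system."""
    name: str
    documented_phone: str  # number on file from BEFORE any change request


@dataclass(frozen=True)
class BankChangeRequest:
    """Hypothetical request to change where a vendor's invoices are paid."""
    vendor: Vendor
    new_account: str
    received_via: str                      # e.g. "email", "phone", "sms"
    callback_number: Optional[str] = None  # number AP actually dialed to confirm
    confirmed_by_voice: bool = False       # did the known contact confirm it?


def may_apply_change(req: BankChangeRequest) -> bool:
    """Return True only if the change was confirmed out of band."""
    # However legitimate an email looks, it never counts as verification alone.
    if not req.confirmed_by_voice or req.callback_number is None:
        return False
    # The callback must go to the previously documented number, never to
    # a number supplied by the (possibly fraudulent) requester.
    return req.callback_number == req.vendor.documented_phone


if __name__ == "__main__":
    acme = Vendor("Acme Supplies", documented_phone="+1-555-0100")

    email_only = BankChangeRequest(acme, "NEW-ACCT-001", "email")
    called_number_in_email = BankChangeRequest(
        acme, "NEW-ACCT-001", "email",
        callback_number="+1-555-0199", confirmed_by_voice=True)
    called_documented_number = BankChangeRequest(
        acme, "NEW-ACCT-001", "email",
        callback_number="+1-555-0100", confirmed_by_voice=True)

    for req in (email_only, called_number_in_email, called_documented_number):
        print(may_apply_change(req))
```

Run as a script, it prints `False`, `False`, and `True`: only the request confirmed by calling the documented number passes. A real system would also normalize phone number formats and log who made the callback; this sketch compares strings exactly to keep the rule visible.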

I’m not going to kid you by saying that long-term phishing scams are easy to recognize or defeat. They are, by their very nature, tougher to recognize and beat than the single link or email scams. They are intended to be. But by creating and enforcing policies and security awareness training directed against such scams, you can decrease the risk that they are successful.

Really, the old Russian proverb needs to be more complete. It should be: Educate, Trust, but Verify.