By helping criminals create spear phishing emails and cracking tools and verify stolen credit cards, FraudGPT will only increase the frequency and efficiency of attacks.

When ChatGPT became available to the public, I warned about its misuse by cybercriminals. Because tools like ChatGPT have "ethical guardrails" built in, cybercriminals can only take the platform so far. So tools like WormGPT appeared on the dark web to fill the gaps as "ethics-free" alternatives.

Security researchers at Netenrich have identified FraudGPT, a new multipurpose AI toolset. According to its ad on the dark web, FraudGPT can be used for:

- Writing malicious code

- Creating undetectable malware

- Creating phishing pages

- Creating hacking tools

- Writing scam pages and emails

- Finding vulnerabilities

- Finding cardable websites

- And more

So, for about $142 a month, a novice cybercriminal gets access to an AI platform that may not make them an expert but certainly gives them expert-level execution.

I see this as only the beginning. There's always a new face looking to get into the cybercrime game, and tools like FraudGPT cost a small fraction of what a single cyber attack can bring in. We should all assume that social engineering attacks will become far more effective, so users interacting with email and the web need to be materially more vigilant, and continual security awareness training is how that vigilance is built.

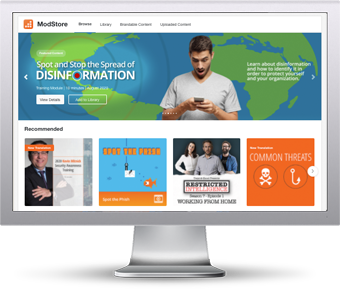

The ModStore Preview includes: