AI is going to allow better, faster, and more pervasive attacks.

For a few years, if you attended one of my presentations involving AI, I would tell you all about AI and AI threats…perhaps even scare you a bit…and then tell you this, “AI attacks are coming, but how you are likely to be attacked this year doesn’t involve AI. It will be the same old attacks that have worked for decades.”

I always got lots of comforted smiles from those ending lines. But this year is different. This year, if you are successfully attacked, AI is likely to be involved. Starting now, AI is more than likely to be involved, and by next year…for sure…AI will be the main way you are attacked.

AI promises to solve many of humanity’s long-standing problems (e.g., diseases, traffic management, better weather prediction, etc.), improve productivity, and give us many inventions and solutions that were not easily achievable. Unfortunately, AI will also allow cyberattackers to be better at malicious hacking.

This article will discuss many of the ways AI will be used by attackers to “better” attack us. I’m not talking about things way, way in the future. I’m talking about improvements happening now that will become forevermore the way things are done, starting this year and definitely normalized by next.

Note: I’m not going to cover attacks against AI itself, such as data poisoning or jailbreaking LLMs.

The Most Common Cyberattack Types

First, a little important relevant cybersecurity history. Most cyberattackers employ two primary methods, social engineering and exploiting software and firmware vulnerabilities, to gain initial access to victims, their devices, and networks. There are several other methods (e.g., misconfigurations, eavesdropping, etc.) that a hacker or their malware program can use to gain initial access, but just two methods — social engineering and exploiting software and firmware vulnerabilities — account for the vast majority of attacks.

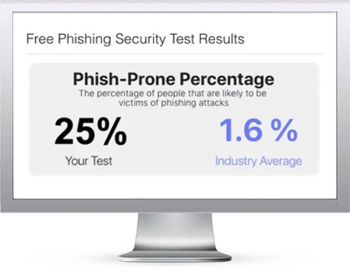

Social engineering is involved in 70%-90% of attacks, and exploited software and firmware vulnerabilities account for about a third. These two types of attacks account for 90%-99% of the cyber risks in most environments (especially if you include residential attacks). So, I’ll cover those attack types first.

Note: Mandiant gave the 33% figure in 2023. Anecdotally, it looks like it may have crept up to 40% over the last two years, mostly due to a few ransomware gangs specifically targeting particular appliance vulnerabilities at scale.

Social engineering is an act where a scammer fraudulently induces a potential victim to perform an action (e.g., provide confidential information, click on a rogue link, execute a trojan horse program, etc.) that is contrary to the victim’s self-interests. It’s a criminal con.

We’ve long known that the more information a scammer has about his potential target, the more he can use that information in the scam to convince the target to perform the requested action. Spear phishing is when a scammer is performing a phishing attack against a specific victim or group, often using learned information (instead of more general attacks with no specific information on the target included).

Barracuda Networks reported that although spear phishing accounted for less than 0.1% of all email-based attacks, it accounted for 66% of successful compromises. That’s huge for a single root cause!
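To put those two Barracuda figures side by side, here is a rough back-of-the-envelope calculation. It assumes both percentages describe the same pool of email-based attacks, and it treats 0.1% as the spear phishing share even though the report says "less than," so the result is a floor and not a measurement.

```python
# Back-of-the-envelope comparison using the two Barracuda figures quoted above.
# Assumption: both percentages describe the same population of email attacks.
spear_share_of_attacks = 0.001      # "less than 0.1%", treated as an upper bound
spear_share_of_compromises = 0.66   # 66% of successful compromises

other_share_of_attacks = 1 - spear_share_of_attacks
other_share_of_compromises = 1 - spear_share_of_compromises

# Compromises produced per unit of attack volume, for each category.
spear_yield = spear_share_of_compromises / spear_share_of_attacks
other_yield = other_share_of_compromises / other_share_of_attacks

relative_effectiveness = spear_yield / other_yield
print(f"Per message, spear phishing is roughly {relative_effectiveness:,.0f}x "
      "more likely to end in a compromise than generic phishing")
```

Under those assumptions, a single spear phishing message comes out on the order of 1,900 times more likely to produce a compromise than a generic one. The exact number matters less than the scale of the gap.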

I’m sharing these important facts because AI is getting ready to significantly increase and improve personalized spear phishing and exploit attacks.

AI’s Impact on Social Engineering

AI-enabled social engineering bots will begin to do a lot of things to accomplish more successful social engineering, including:

- Better craft the fraudulent identity personas of the attackers (i.e., who they are claiming to be) to be more believable

- Better craft phishing messages, using better topics, better messages, and perfect grammar and language for the population being targeted

- Use personalized OSINT on the person who will be sent the spear phishing attack to craft a personalized spear phishing message that is more likely to be successful

- Use industry-specific vernacular when targeting particular industries

- Craft better responses and ongoing conversations when a target responds to the initial inquiry and asks questions

- Better enable fake employees to get jobs by using AI-enabled services to craft fake personas, perform well in interviews, etc. (already happening)

- Craft phishing messages more likely to bypass traditional anti-phishing defenses

- Use AI-enabled deepfake technologies to send fraudulent audio and video spear phishing messages to targets (more on this below)

- In general, more targeted, more successful social engineering

Let me expand on a few of these points.

The best and most successful hackers have always done OSINT research on their targets. The more they do, the better their chance of success. What is going to change with social engineering AI bots is that the AI will do all the research, and it will likely do it better. The hacker will decide to target a particular company or organization, and the bot will scour the web, use OSINT tools, and locate all the possible employees for the targeted entity. Then, it will locate the professional and personal email addresses, professional and business phone numbers, work locations, hobbies, professional position, and any details of any current projects the employees are working on. Then the AI bot will craft what it thinks is the best-looking spear phish it can to get the employee to perform a requested harmful action. And it will be more successful than humans performing social engineering.

The terms I’m starting to see to describe AI-enabled information gathering for social engineering are hyper-personalized or hyper-realistic social engineering.

How do we know that AI-enabled social engineering bots will be more successful in engineering people? Because they already are.

Ever since LLMs went mainstream with OpenAI’s ChatGPT in late 2022, social engineering experts have been testing and measuring social engineering AI bots against human talent. If you don’t know it, there have been various contests involving Human Risk Management (HRM) firms and consultants to see how well a human can do social engineering versus a social engineering AI bot.

Back in 2023, the human social engineers were still winning the contests. In 2024, the humans were still winning, but the AI bots were getting so close that sometimes it appeared as if the AI bot won, even if it didn’t. I remember hearing that an audience at one of the contests gave a standing ovation to the AI bot when it lost because they all thought the AI bot deserved to win. It was that close. When I heard that, I realized it was just a matter of time till the AI bots were winning.

Well, in 2025, the social engineering AI bots are winning.

Several AI vs. human social engineering competitions have now reported that the AIs are beating the humans, including this report. I’ve seen several demos of AI-enabled social engineering bots, and most people would not be able to tell the difference between the AI bot and a human being. In some of the demos, the AI bot still hesitates, giving an unnatural delay of a second or so before replying, but that gap is certainly going to disappear soon…forever.

The Rubicon has been crossed. The AI is already better at social engineering and will only get better. Soon, no social engineering criminal will rely on anything other than AI to do social engineering. To rely on a human is to be less successful.

AI-Enabled Phishing

Most phishing is done by attackers using “phishing kits” or phishing-as-a-service software. The attacker isn’t a lone person doing each phishing attempt one-by-one. Nope. They use a tool, either a “phishing kit” or phishing-as-a-service productivity offering.

The phishing tool crafts the phishing message, sends it to the supplied mailing list, and may create and handle the websites and other content related to the phishing scam. The best phishing tools handle everything from beginning the scam to collecting and distributing the ill-gotten loot. It’s the rare hacker that does everything by hand.

Most phishing kits and services are now AI-enabled. Egress (a part of KnowBe4) reports that 82.6% of phishing emails were crafted using AI-enabled tools and that 82% of phishing tools mentioned AI in their advertising (yes, criminals advertise their tools and feature sets). By the end of the year, that figure will be close to 100%. Hacker tools not incorporating AI-enabled features will quickly be out of customers.

AI-Enabled Deepfakes

Anyone can get a picture of someone, along with 6-60 seconds of audio of that person, and craft a very realistic deepfake video and audio of that person saying or doing anything. You don’t have to be an AI deepfake expert. It won’t even cost you anything. It will take you longer to sign up for the free accounts on the deepfake sites than to create your first very realistic deepfake video. This has been possible for over a year.

If you haven’t done your first deepfake, here’s what I recommend: Step-by-Step To Creating Your First Realistic Deepfake Video in a Few Minutes

Deepfakes allow bad people to create fake videos, fake audio, fake pictures, and push them as real. It allows scammers to do fake phone calls, leave fake voice mail messages, and do fake Zoom calls.

We used to warn people to be wary of every email they receive asking them to do something strange. Then we had to watch for unexpected SMS and WhatsApp messages. Now, we have to add any unexpected audio or video digital connection, asking them to do something strange.

But it’s worse than that.

Real-Time Deepfakes

Today, anyone can participate in a real-time online conversation posing as anyone else. They can use a cloud- or laptop-based service or software that allows them to morph, in real-time, to anyone else, both in what they look like and how they sound. Anyone can mimic anyone else.

Check out the podcast on the subject by my co-worker, Perry Carpenter (related image below).

Anyone can create a real-time video and audio feed pretending to be anyone else. The synthetic being (that’s what we call deepfakes) mimics whatever the creator does in real-time without any recognizable delay. If the creator speaks, the synthetic entity speaks, but using either the creator’s voice or their own simulated voice. Celebrity faces and voices can be easily mimicked. If the creator moves, the synthetic entity moves the same. It’s almost unbelievable to watch.

I often create real-time deepfakes of people and then call them and let them speak to themselves. It never fails to impress. Cool party trick. Soon to be a very common scammer tactic.

It gets worse.

Anyone can change from one fake persona into another as easily and quickly as clicking their mouse. I’m Taylor Swift now, I’m Nicolas Cage a second later, and I’m Liam Neeson a second after that.

The first time Perry and my co-workers saw this technology, it was being sold as a service on a Chinese website about six months ago. Then we saw it as a free cloud-based service by a US cybersecurity professional a month after that. Now, anyone can download software to their high-end, multi-GPU-enabled laptop and do the same thing. It’s click, click, click to become someone else…in real-time.

It gets worse.

Then Perry discovered a “plug-in” that allowed these real-time deepfakes to participate in a Zoom call (image below).

Perry was initially hesitant about sharing the name of the tool he used to craft fake Zoom identities. He was worried about people using the tool to do scams. And he’s right. It will absolutely be used to scam people. Millions of people. Millions of victims. It’s too late. The tool exists. It will be used to abuse.

It gets worse.

Real-Time AI-Driven Interactive Conversations

It's now possible for someone to create a real-time, AI-driven interactive chatbot that can participate in conversations so realistically that most people would not notice it’s a bot. I’ve seen several demos of this technology, first from Perry during KnowBe4’s 2025 annual KB4-CON convention in April (image from the presentation below).

Perry creates his own private chatbot and then feeds it a multi-page “prompt” that turns it into a malicious chatbot looking to socially engineer people out of private information or to simulate fake kidnapping ransom requests. He often gives the chatbots celebrity personas like Taylor Swift, or, just to humor the audience, an evil Santa persona.

Then he has the chatbot converse in real-time with someone, often himself, in the demos. And if you’ve ever seen one like it, there’s no way you cannot be scared by what you are seeing. It’s an AI-enabled chatbot, sounding and acting human, and realistically participating in a conversation with a human in a way that is 100% human-sounding. I’ve seen busy, over-stimulated audience members, one-by-one, stop what they are doing, stop looking at their screens and cell phones, and focus only on what Perry is showing them. It’s an attention getter. Everyone knows they are seeing the future of social engineering.

You are seeing and hearing Taylor Swift social engineering a (simulated) victim out of their personal and company information. You even feel for the bot as it explains the unusual and unexpected circumstances it’s going through as a tech support person that require the victim to provide the requested information. I’ve heard audiences audibly shriek as Perry’s evil Santa persona starts cussing and demanding a ransom payment in order not to cut up the (simulated) kidnapping victim.

It’s an emotional experience. And that is exactly what AI is bringing to the table…on purpose.

What the audience doesn’t know is that the future they are seeing is only months away!

Perry has since done similar demos of the technology at many top conferences, including RSA (where it was a top-rated presentation) and to top media broadcasts, like CNN. It never fails to impress.

Anyone can do it, although the real-time AI-enabled chatbots are still a bit cutting edge and take more work than the real-time deepfakes that just mimic what the creator says and does. But within a few months, anyone will be able to have one. I’ve seen dozens of demos of the same technology being used in both attack and defense scenarios now.

Faster, Better Exploitation

We had over 40,200 separate publicly announced vulnerabilities last year. In 2023, for the first time in history, more of the vulnerabilities exploited by bad guys against people and companies were zero-days (vulnerabilities not generally known by the public and/or for which a vendor patch was not available) than non-zero-days.

We are going to see more new vulnerabilities discovered, more zero-days used, and more and faster finding of exploitable vulnerabilities in the places where they exist.

Humans have long used software to find new bugs. When I did bug hunting (for 20 years), I used to find half the bugs and the software found half the bugs. A lot of times the vulnerability-hunting software would find something weird…but not a true exploit…but then my human investigating found something else related that was a real exploit. So, half credit for the software, half credit for the human. I think a lot of bug hunters would tell you that’s similar to their experience.

What is changing is that humans are increasingly using AI bots with more autonomy to find more bugs. As HackerOne reported recently, many “hackbots” now routinely find new vulnerabilities. About 20% of their bug finders report using AI-enabled hackbots and that percentage is likely to be 100% very soon. Expect the current 40.2K number of public bugs found to explode next year. Expect the number of zero-days to explode (right now they number near 100 a year).

When patches are released, hackers will use AI to reverse engineer them faster and make widely used exploit code. AI-enabled bots will find more unpatched things and exploit them more quickly. Years ago, the conventional wisdom was that most companies could wait a month or more to test and patch their systems. It’s already down to a week or so, even without AI-enabled bots scouring the web looking for vulnerable hosts. I can easily see the patching window shrinking to days or less than a day.

I think it’s very easy to see a day coming soon when the defenders only have hours to minutes to patch their most vulnerable hosts. Defenders will have to use AI to patch their systems. It will be the only way to patch quicker than the vulnerability-hunting AI-bots looking at their systems.
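To make the shrinking window concrete, here is a minimal sketch of how a defender might check the same inventory against a month, a week, and a day. The host names, dates, and the `overdue_hosts` helper are all hypothetical and exist only for illustration. A real program would pull this data from a patch management system.

```python
from datetime import datetime, timedelta

def overdue_hosts(hosts, patch_released, window, now):
    """Return names of hosts still unpatched after the allowed window."""
    deadline = patch_released + window
    if now <= deadline:
        return []  # still inside the window, nothing is overdue yet
    return sorted(name for name, patched in hosts.items() if not patched)

# Hypothetical inventory: host name -> whether the patch is installed.
hosts = {"vpn-gw": False, "mail-01": True, "web-02": False}
patch_released = datetime(2025, 6, 1, 9, 0)
now = datetime(2025, 6, 3, 9, 0)  # two days after the patch came out

for label, window in [("month", timedelta(days=30)),
                      ("week", timedelta(days=7)),
                      ("day", timedelta(days=1))]:
    print(label, overdue_hosts(hosts, patch_released, window, now))
```

Two days after release, the same two unpatched hosts are fine under a monthly or weekly policy and already overdue under a one-day policy. Nothing about the environment changed. Only the window did.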

AI-enabled bots will be able to move more quickly from the initial access entry to the desired target (i.e., A to Z movement). We already have open source tools like BloodHound that allow attackers to map an Active Directory environment to do this. I’ve seen internal red teams use BloodHound to do point-and-click exploitation, allowing the compromise of the intended target in seconds…all automated.

AI-enabled vulnerability hunting tools will absolutely make this sort of thing just the way hacking is done. Why hack hard if you can hack easy?
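The idea behind that kind of A to Z mapping is a path search over a graph of who can reach what. The sketch below is not BloodHound’s code or API. It is a plain breadth-first search over a made-up, four-node environment, and every node name in it is hypothetical.

```python
from collections import deque

def shortest_path(graph, start, goal):
    """Breadth-first search; returns the shortest list of nodes, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None

# Hypothetical environment: an edge means "access to X can lead to access to Y".
graph = {
    "workstation": ["helpdesk-account", "file-server"],
    "helpdesk-account": ["admin-workstation"],
    "file-server": [],
    "admin-workstation": ["domain-admin"],
}

path = shortest_path(graph, "workstation", "domain-admin")
print(" -> ".join(path))
```

Defenders can run the same search on their own environment. Every edge on the returned path is a candidate to cut, and removing any one of them forces the attacker onto a longer route or leaves no route at all.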

Agentic AI Malware

Agentic AI malware is going to do everything a hacker could do (e.g., OSINT, initial access, value extraction, etc.) faster and better than a human attacker. We already have malware that steals people’s faces, does an AI-enabled deepfake, and then steals all their money from banks that require facial recognition as authentication. That’s old news and works with any biometric trait (e.g., face, fingerprint, retina, voice, etc.).

What I’m talking about today is agentic AI malware that does the full suite of hacking starting with finding a potential victim, doing research, figuring out how to break in (e.g., social engineering or vulnerability exploitation, etc.), stealing something of value, and returning it to the owners of the AI bot. Here’s a graphical representation of agentic AI-enabled malware:

I’ve covered this topic in more detail here: Agentic AI Ransomware Is On Its Way.

There has always been earlier-generation software and malware that did similar hacking. What’s changed is that agentic AI can do it faster, better, and with specificity. A controlling hacker will be able to say something like, “I want you to break into human risk management companies that make $100 million or more in annual revenue and steal the money in their primary payroll bank account.” And let it go. It will research which organizations meet those criteria, research how to break into them, and steal the money. It will determine what it needs to do to maximize the value of the theft. All the hacker does is give it a starting prompt, and the hacking agentic bot does all the rest.

Disinformation

Disinformation is huge these days. So many entities want to create and spread fake news. As hard as I try, I sometimes fall victim to fake news that I unsuspectingly pass along. The best fake news is the fake news passed along by larger, more trusted news sources. AI is already helping to better pass along and spread disinformation, and sometimes it’s not even to humans.

We’ve already tracked AI-created fake news stories that used APIs and other methods to reach out to other AIs and news sources to better spread disinformation. Yes, you read that right. Sometimes AIs reach out to other AIs to spread disinformation.

In this particular example, a Russian propaganda platform used AI-created articles to flood the Internet with 3.6 million disinformation articles, which various legitimate news source AIs picked up and republished. It’s AI to AI to humans. Widespread disinformation was never so easy.

This Is All Happening Now

Everything I talked about above is already happening or is less than a year away. Most of it will be common and mainstream by the end of this year and into the beginning of next year.

How do I know this?

Well, I’m a big fan of history, and the best indicator of future behavior is past behavior. And so far, when AI researchers figure out a way that AI can be used to do something malicious, it’s about 6 to 12 months before it ends up being put into mainstream malware and social engineering phishing tools. That makes sense. It takes a while before the latest developments in technology can become commonly used in tools and programs, good and bad. So far, every AI-enabled development that can be abused by the bad guys has eventually ended up in a hacking tool, and within a year, nearly every related hacking tool. Expect the same here with the technologies discussed above.

Your Defenses

If this future attack scenario initially seems bleak, remember that the good guys invented AI (in 1955, no less), the good guys are the ones improving AI, and the good guys are spending far more and using AI far better than the bad people. For once, the good guys are likely to have a cybersecurity defense ecosystem that is ahead of the bad guys…or at least the opportunity for that to happen.

This is not an asymmetric cyberwar where only the bad guys use AI. No, quite the opposite. Every cybersecurity company is already using AI, and soon agentic AI, to improve their products to better protect their customers. KnowBe4 has been using AI for over seven years to improve its products, and our commitment to using AI and agentic AI is stronger than ever. It’s not an exaggeration to say that nearly every daily meeting we have involves talking about how AI can help us at our jobs and at better defending our customers.

We already have evidence to support that our AI-enabled products and agents are better at helping to secure our customers. Not just hoping that is true but seeing real, objective customer evidence of it.

Cybersecurity defenders will be creating a flood of good AI-enabled agentic defenses, including threat hunters, patchers, and bots that find and fix misconfigurations. Bots will run simulated social engineering tests against users, designed specifically for each user’s exact weaknesses and training needs. Organizations will release these defensive bots into their environment and tell them to defend it against the malicious AI bots. They will release threat-hunting bots that proactively seek and destroy the bad bots before they can do harm. The good guy bots will fight the bad guy bots, and the best algorithms will win.

The good guys will have additional help from a stronger, more secure internet infrastructure that makes it harder for bad guys and bots to hide. We will not always be living in the days of the Wild Wild West internet that we live in today. The infrastructure will improve and become more secure. The world’s very best agentic AI algorithm creators, who will design the very best defenses, will go work for cybersecurity vendors and organizations. The math whizzes and algorithm creators (the “algos”) used to go work for Wall Street out of college. Now, they will work for Main Street, making the world safer.

And for the first time in my 36-year career, I see real hope that our future cybersecurity world will be safer than the one we have today. So, where some see only bleakness, I see light and hope. The good guys will win!

So spread the word…AI attacks are here and going to be more common from here on out. AI-enabled attacks will be faster, better, and more pervasive. But we will be using our AI to fight them better.
