Artificial intelligence (AI) is revolutionizing most, if not all, industries worldwide. AI systems use complex algorithms and large datasets to analyze information, make predictions and adjust to new scenarios through machine learning – enabling them to improve over time without being explicitly programmed for every task.

By performing complex tasks that previous technologies couldn't handle, AI enhances productivity, streamlines decision-making and opens up innovative solutions that benefit us in many aspects of our daily work, such as automating routine tasks or optimizing business processes.

Despite the significant benefits AI brings, it also raises pressing ethical concerns. As we adopt more AI-powered systems, issues related to privacy, algorithmic bias, transparency and the potential misuse of the technology have come to the forefront. It's crucial for businesses and policymakers to understand and address the ethical, legal and security implications related to this fast-changing technology to ensure its responsible use.

AI in IT Security

AI is transforming the landscape of IT security by enhancing the ability to detect and mitigate threats in real time. By learning from vast datasets, AI systems can spot patterns faster and at a larger scale than human analysts can. This allows them to rapidly detect and neutralize cyber threats by predicting vulnerabilities and automating defensive measures, helping to safeguard users from data breaches and malicious attacks.

However, this same technology can also be weaponized by cybercriminals, making it a double-edged sword.

Attackers are leveraging AI to launch highly targeted phishing campaigns, develop nearly undetectable malicious software and manipulate information for financial gain. For instance, research by McAfee revealed that 77% of victims targeted by AI-driven voice cloning scams lost money. In these AI voice cloning scams, cybercriminals cloned the voices of victims’ loved ones, such as partners, friends or family members – all to impersonate them and request money.

Considering that many of us use voice notes and post our voices online regularly, this information is easy to come by.

Now, think of the data available to AI. The more data AI systems can access, the more accurate and efficient they become. This data-centric approach, however, raises the question of how the data is being collected and used.

Ethical Concerns

By examining the flow and use of personal information, we can consider the following ethical concepts:

- Transparency: Being open and clear about how AI systems work. Users of the system should know what personal data is being collected, how it will be used, and who will have access to it.

- Fairness: AI's reliance on existing datasets can introduce biases, leading to discriminatory outcomes in areas like hiring, loan approvals or surveillance. Users should be able to challenge these decisions.

- Avoiding Harm: Consider the potential risks and misuse of AI, whether physical, psychological or social. Frameworks, policies and regulations are in place to ensure that AI systems are designed to handle data responsibly.

- Accountability: Clearly defining who is responsible for AI actions and decisions and holding them accountable, whether it's the developer, the organization using the system or the AI itself.

- Privacy: Protecting data and the right to privacy when using AI systems. While encryption and access controls are standard, the massive volume of data analyzed by AI can expose sensitive information to risks. Under certain laws, users must explicitly consent to data processing.

While organizations and governments are consistently working towards better AI governance, we all play a vital role in ensuring the ethical use of AI in our daily lives. Here’s how you can protect yourself:

- Stay informed: Familiarize yourself with the AI systems you interact with and understand what data they collect and how they make decisions.

- Review privacy policies: Before using any AI-driven service, carefully review the privacy policies to ensure that your data is handled in compliance with relevant regulations.

- Exercise your rights: Know your rights under data protection laws. If you believe an AI system is mishandling your data or making unfair decisions, you may have the legal right to challenge it, depending on the laws that apply where you live.

- Demand transparency: Push for companies to disclose how their AI systems work, especially regarding data collection, decision-making processes and the use of personal information.

- Be wary: As AI scams and attacks evolve, always verify any requests that require an immediate or pressing action from you. And always get your news from reputable news sources.

As AI continues to revolutionize the digital world, the ethical, security and compliance challenges will grow and evolve. Understanding the challenges and actively engaging with AI platforms responsibly can help ensure that AI remains an ethical and secure tool.

We all contribute to the future of AI and the innovations we create from it; let's do so in a safe and responsible way. The future of AI is boundless in its potential; rather than waiting for governance, let's take ownership of teaching it to be ethical and using it for good.

This blog is co-written by Sanet Kilian, Senior Director of Content at KnowBe4, and Anna Collard, SVP Content Strategy & Evangelist Africa.

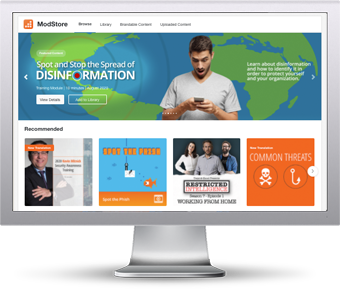
